InterCap: Joint Markerless 3D Tracking of Humans and Objects in Interaction

Yinghao Huang, Omid Taheri, Michael J. Black, Dimitrios Tzionas

Abstract (IJCV'24)

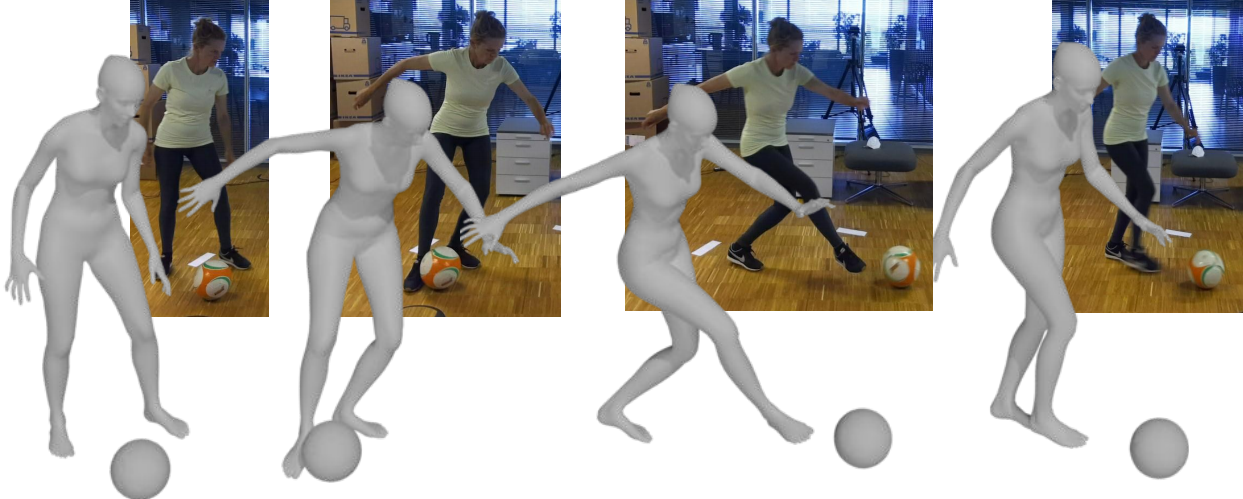

Humans constantly interact with objects to accomplish tasks. To understand such interactions, computers need to reconstruct them in 3D from images of whole bodies manipulating objects, e.g., grasping, moving, and using them. This involves key challenges, such as occlusion between the body and objects, motion blur, depth ambiguities, and the low image resolution of hands and graspable object parts. To make the problem tractable, the community has followed a divide-and-conquer approach, focusing either only on interacting hands, ignoring the body, or on interacting bodies, ignoring the hands. However, these are only parts of the problem. In contrast, recent work focuses on the whole problem. The GRAB dataset addresses whole-body interaction with dexterous hands but captures motion via markers and lacks video, while the BEHAVE dataset captures video of body-object interaction but lacks hand detail. We address the limitations of prior work with InterCap, a novel method that reconstructs interacting whole bodies and objects from multi-view RGB-D data, using the parametric whole-body SMPL-X model and known object meshes. To tackle the above challenges, InterCap uses two key observations: (i) Contact between the body and object can be used to improve the pose estimation of both. (ii) Consumer-level Azure Kinect cameras let us set up a simple and flexible multi-view RGB-D system for reducing occlusions, with spatially calibrated and temporally synchronized cameras. With our InterCap method we capture the InterCap dataset, which contains 10 subjects (5 males and 5 females) interacting with 10 daily objects of various sizes and affordances, including contact with the hands or feet. To this end, we introduce a new data-driven hand motion prior, and explore simple ways for automatic contact detection based on 2D and 3D cues.
In total, InterCap has 223 RGB-D videos, resulting in 67,357 multi-view frames, each containing 6 RGB-D images, paired with pseudo ground-truth 3D body and object meshes. Our InterCap method and dataset fill an important gap in the literature and support many research directions. Our data and code are available for research purposes.
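The abstract mentions automatic contact detection from 3D cues without giving details. As a rough illustration only, and not the authors' method, one simple 3D cue is proximity: a body vertex is flagged as "in contact" when it lies within a small distance of the object surface. The function name `detect_contact`, the 2 cm threshold, and the toy vertex arrays below are all assumptions made for this sketch.

```python
import numpy as np

def detect_contact(body_vertices, object_vertices, threshold=0.02):
    """Flag body vertices within `threshold` meters of any object vertex.

    Hypothetical helper for illustration; the threshold is an assumed value.
    """
    # Pairwise differences, shape (num_body, num_object, 3)
    diffs = body_vertices[:, None, :] - object_vertices[None, :, :]
    # Euclidean distances, shape (num_body, num_object)
    dists = np.linalg.norm(diffs, axis=-1)
    # Distance from each body vertex to its nearest object vertex
    nearest = dists.min(axis=1)
    return nearest < threshold, nearest

# Toy data (assumed): three "body" vertices and two "object" vertices, in meters
body = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 0.5], [0.0, 0.0, 1.0]])
obj = np.array([[0.01, 0.0, 0.0], [0.3, 0.0, 0.0]])

in_contact, nearest = detect_contact(body, obj)
print(in_contact)  # only the first body vertex is within 2 cm of the object
```

The dense pairwise distance matrix is fine for toy inputs, but for full meshes (an SMPL-X body has 10,475 vertices) a spatial index such as a KD-tree would likely be a better fit.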


Citation

@article{huang2024intercap,


Contact

For commercial licensing, please contact ps-licensing@tue.mpg.de

Acknowledgements

We thank Chun-Hao Paul Huang, Hongwei Yi, Jiaxiang Shang, as well as Mohamed Hassan for helpful discussions about technical details. We thank Taylor McConnell, Galina Henz, Markus Höschle, Senya Polikovsky, Matvey Safroshkin, Yuliang Xiu, Jinlong Yang and Tsvetelina Alexiadis for the data collection and data cleaning. We thank all the participants of our experiments. We also thank Benjamin Pellkofer for the IT and website support.

The authors thank the International Max Planck Research School for Intelligent Systems (IMPRS-IS) for supporting OT. This work was supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.